Dynamic Match Kernel with Deep Convolutional Features for Image Retrieval

Computer Vision Lab, Nankai University

The goal of content-based image retrieval (CBIR) is to efficiently find the images most relevant to a given query in a huge candidate corpus.

Different lines of existing retrieval frameworks compute their search criteria using different image representation and indexing schemes.

To represent query and candidate images, both local features, which robustly depict low-level image content, and global attributes, which reflect semantic meaning, have been well exploited, but independently of each other.

(See the informative survey by Zheng et al. for an overview.)

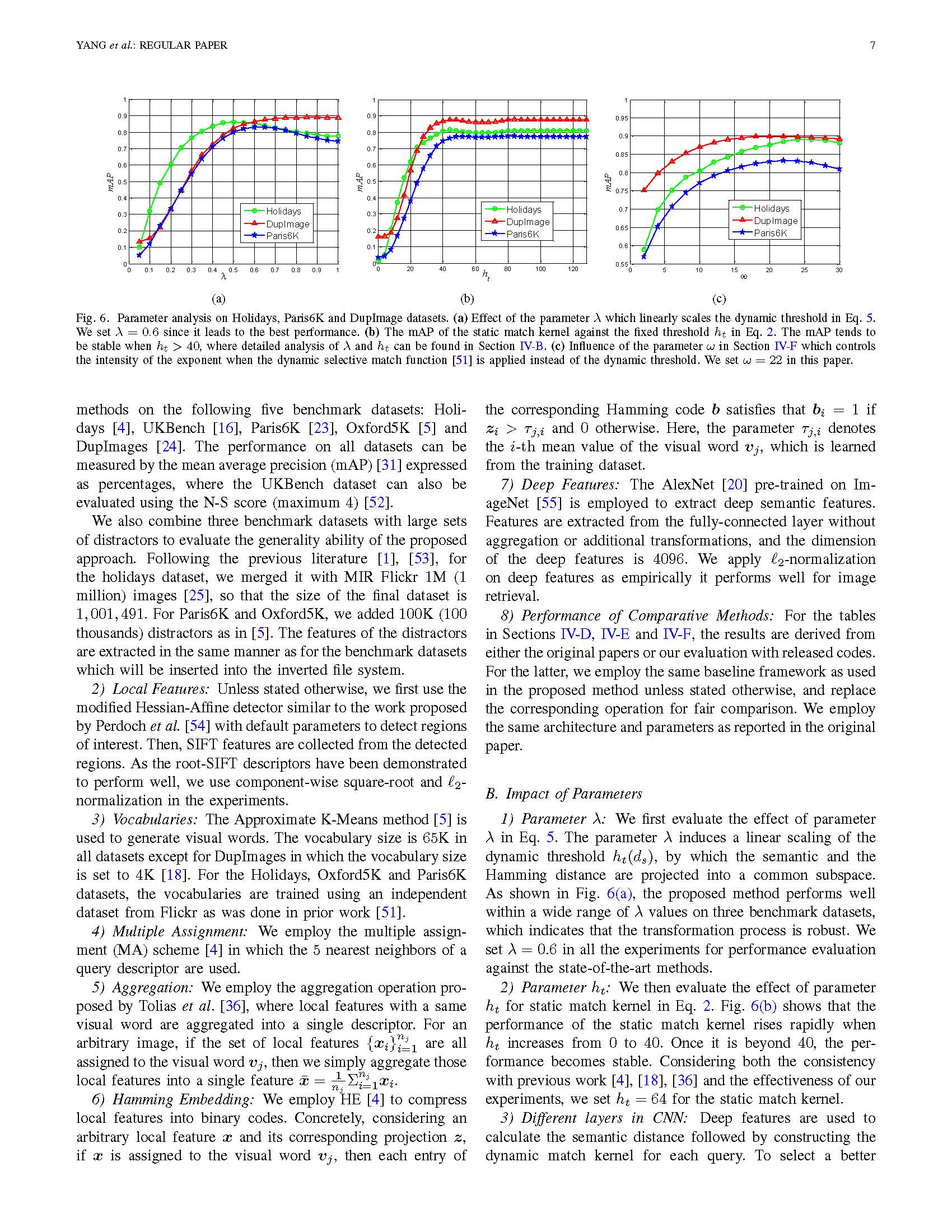

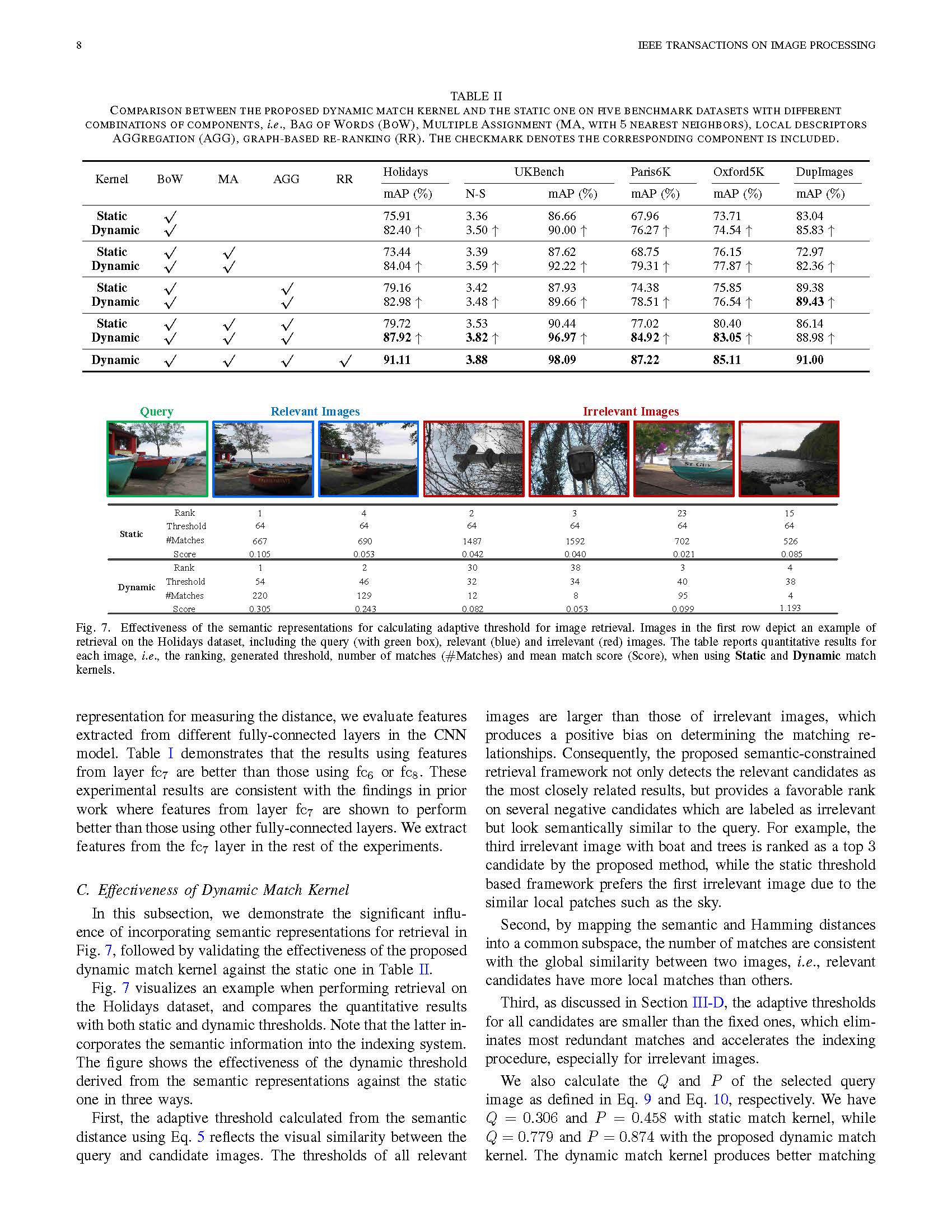

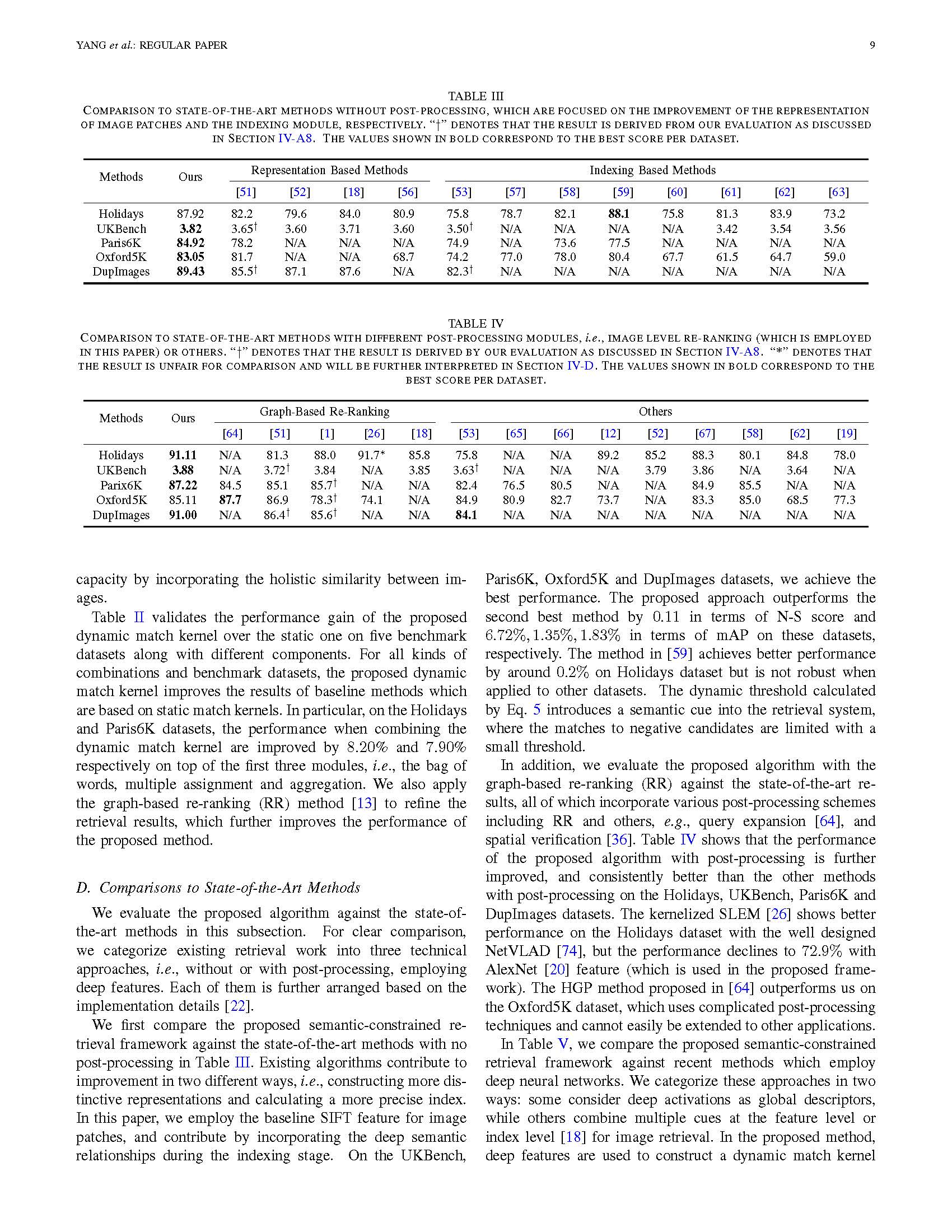

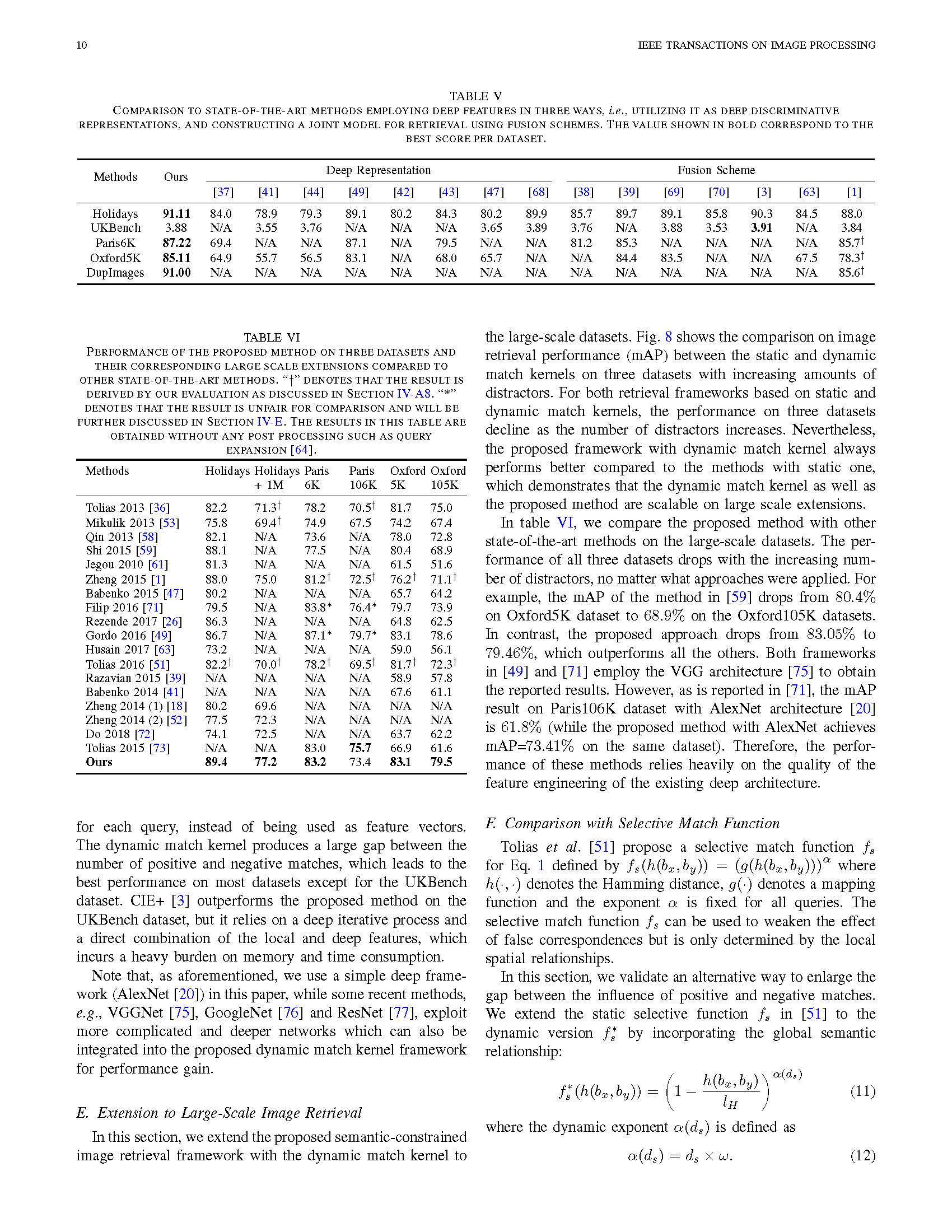

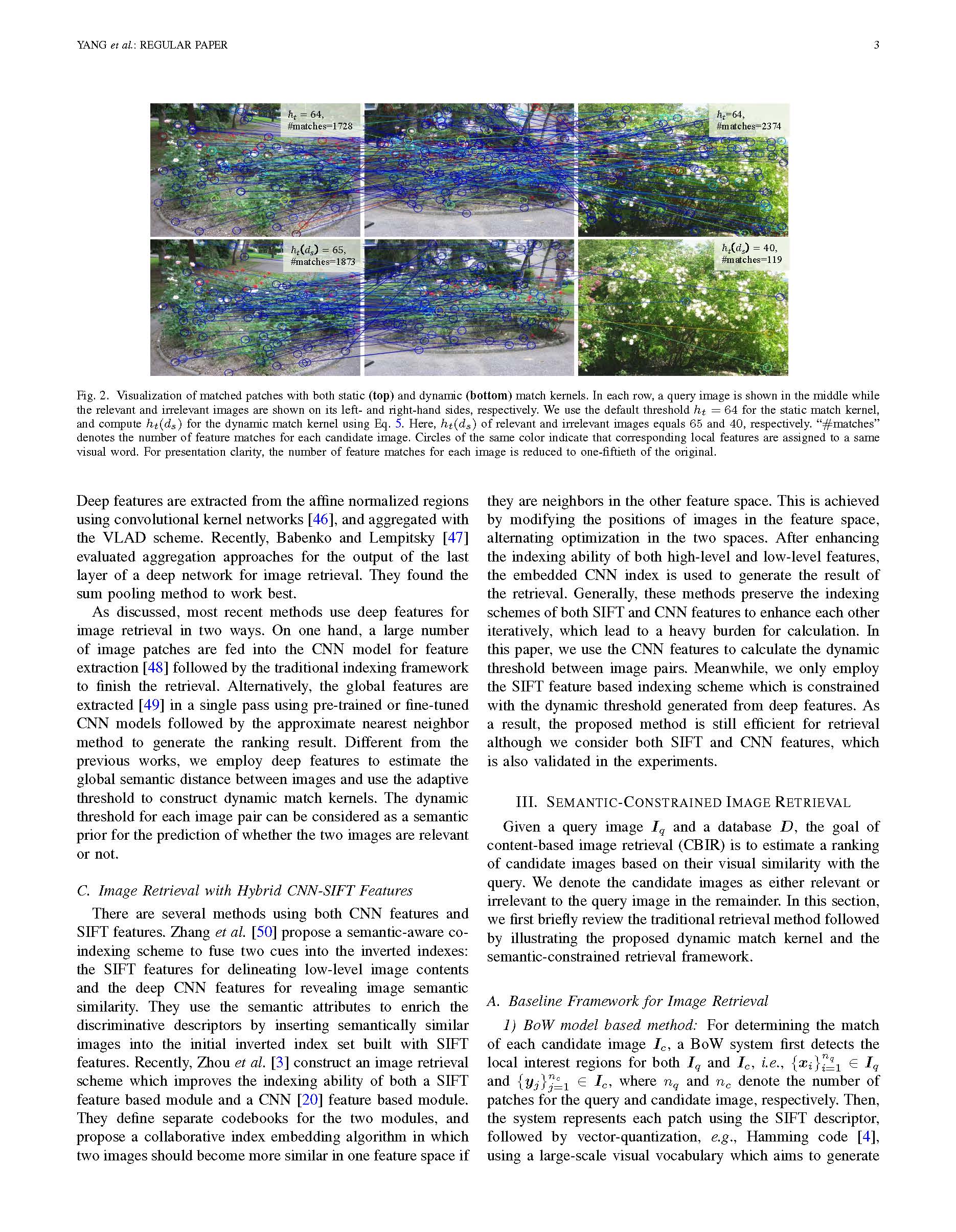

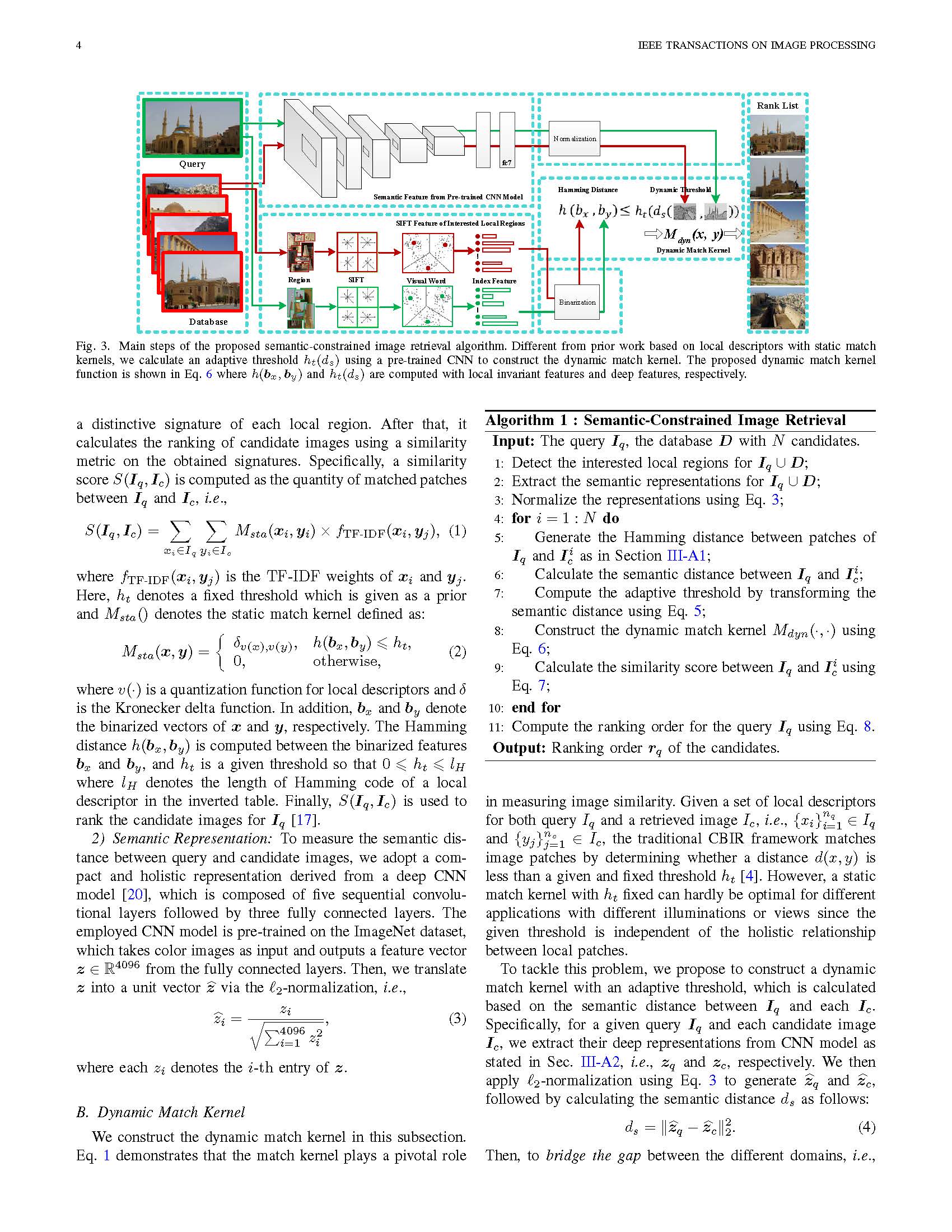

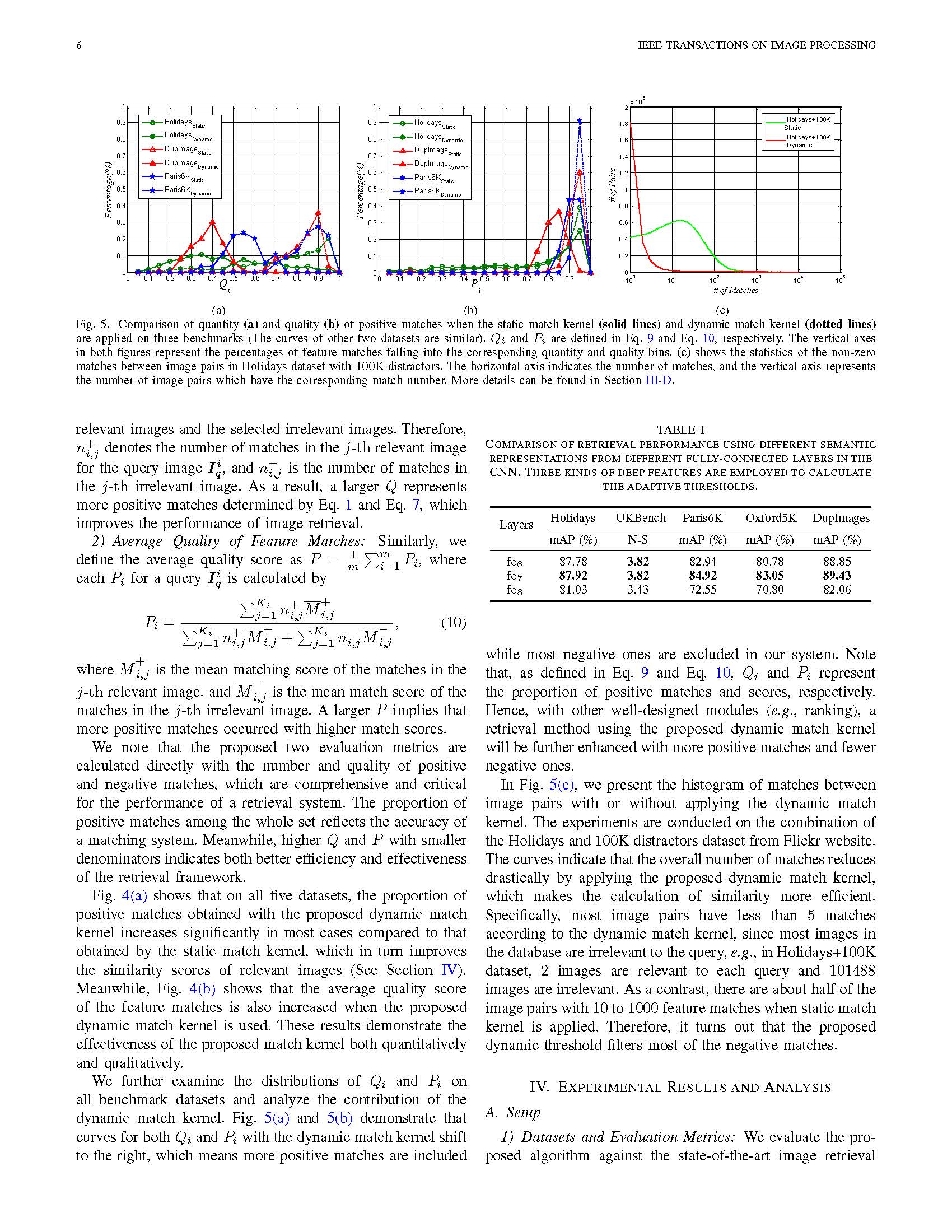

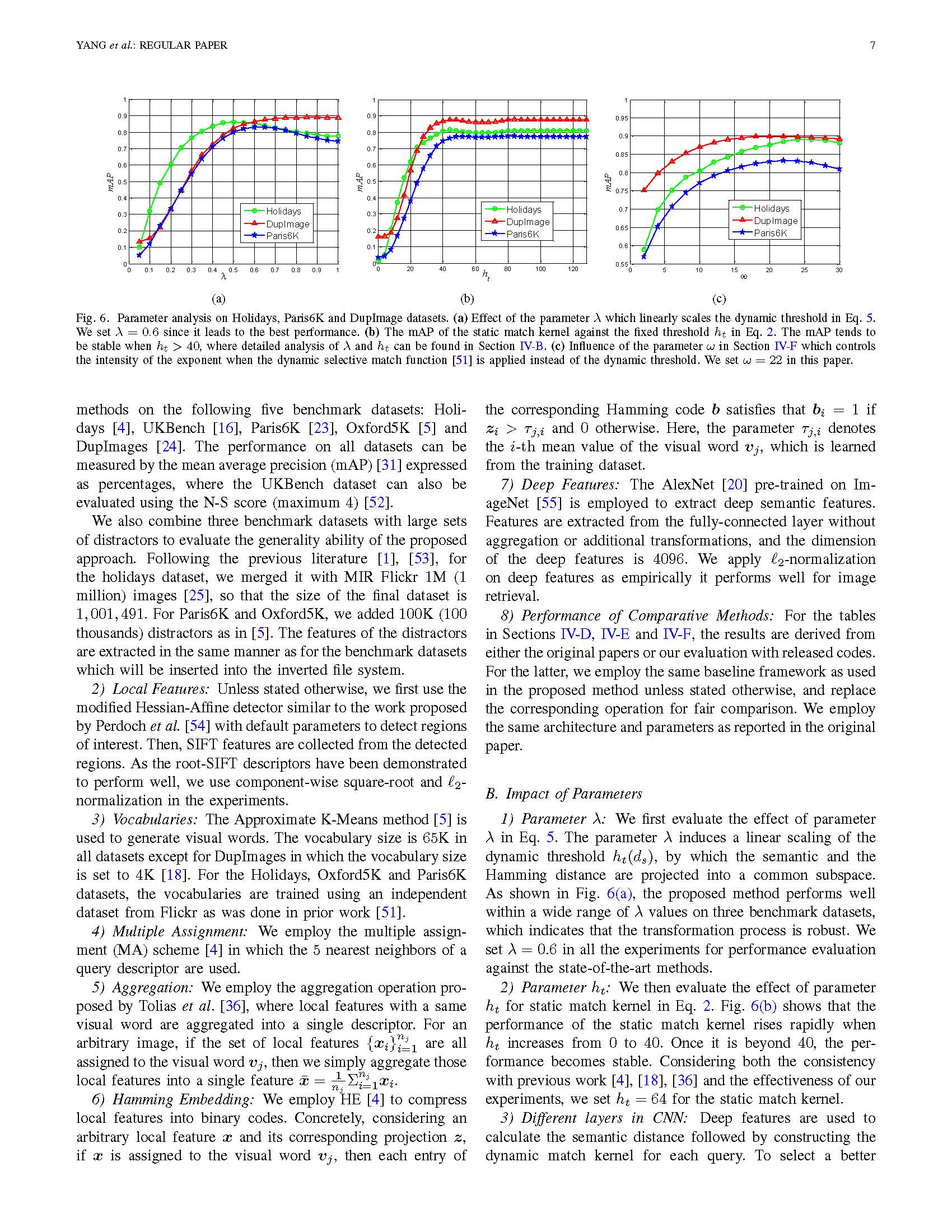

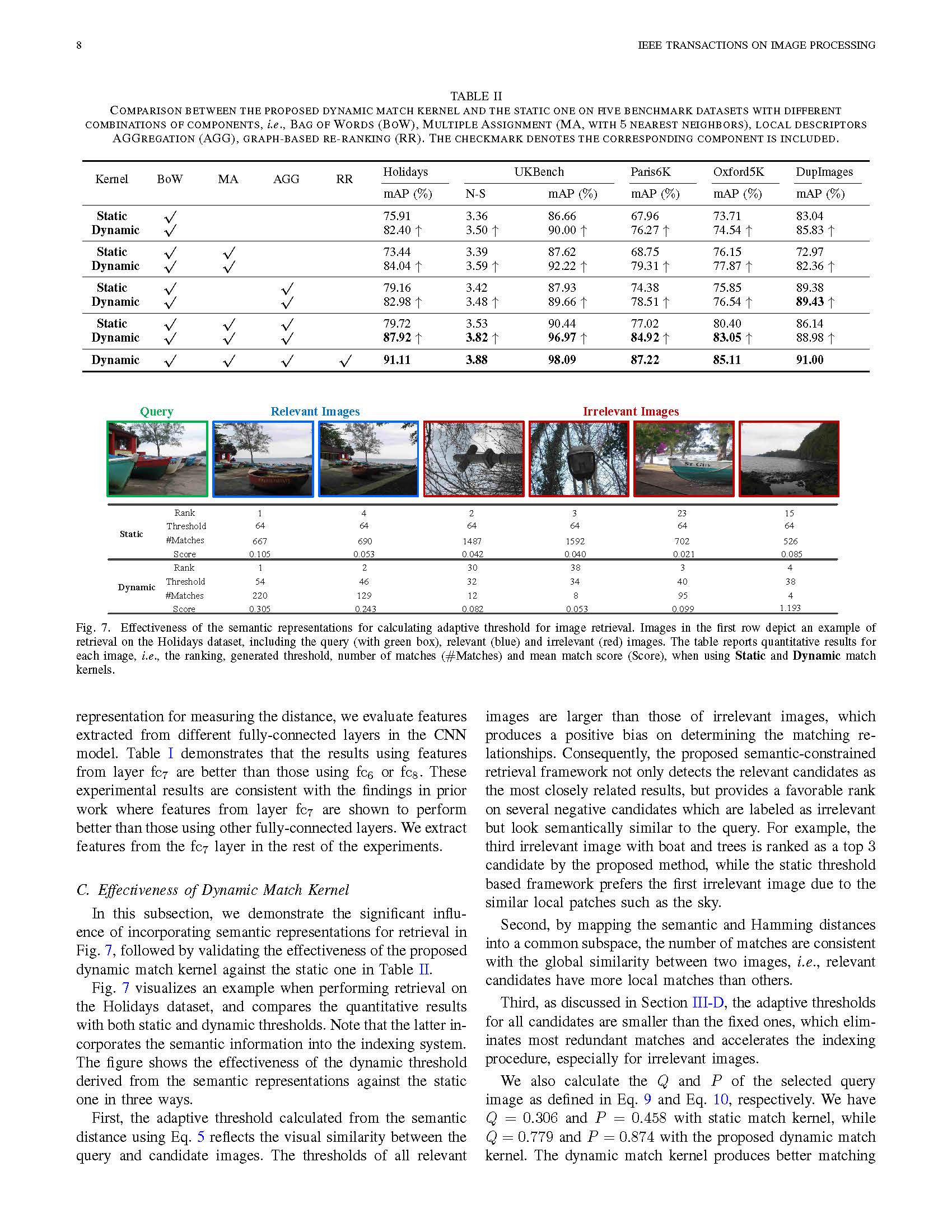

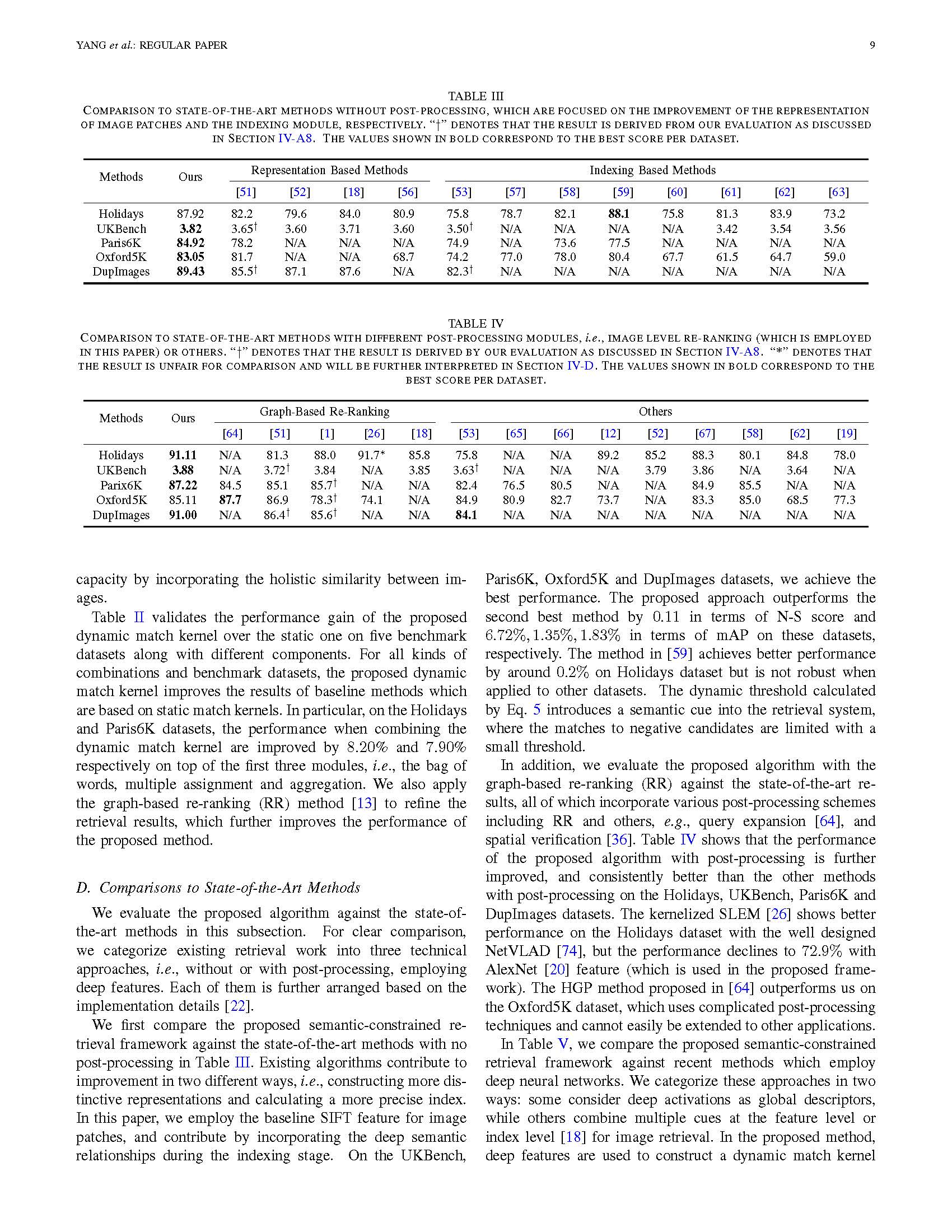

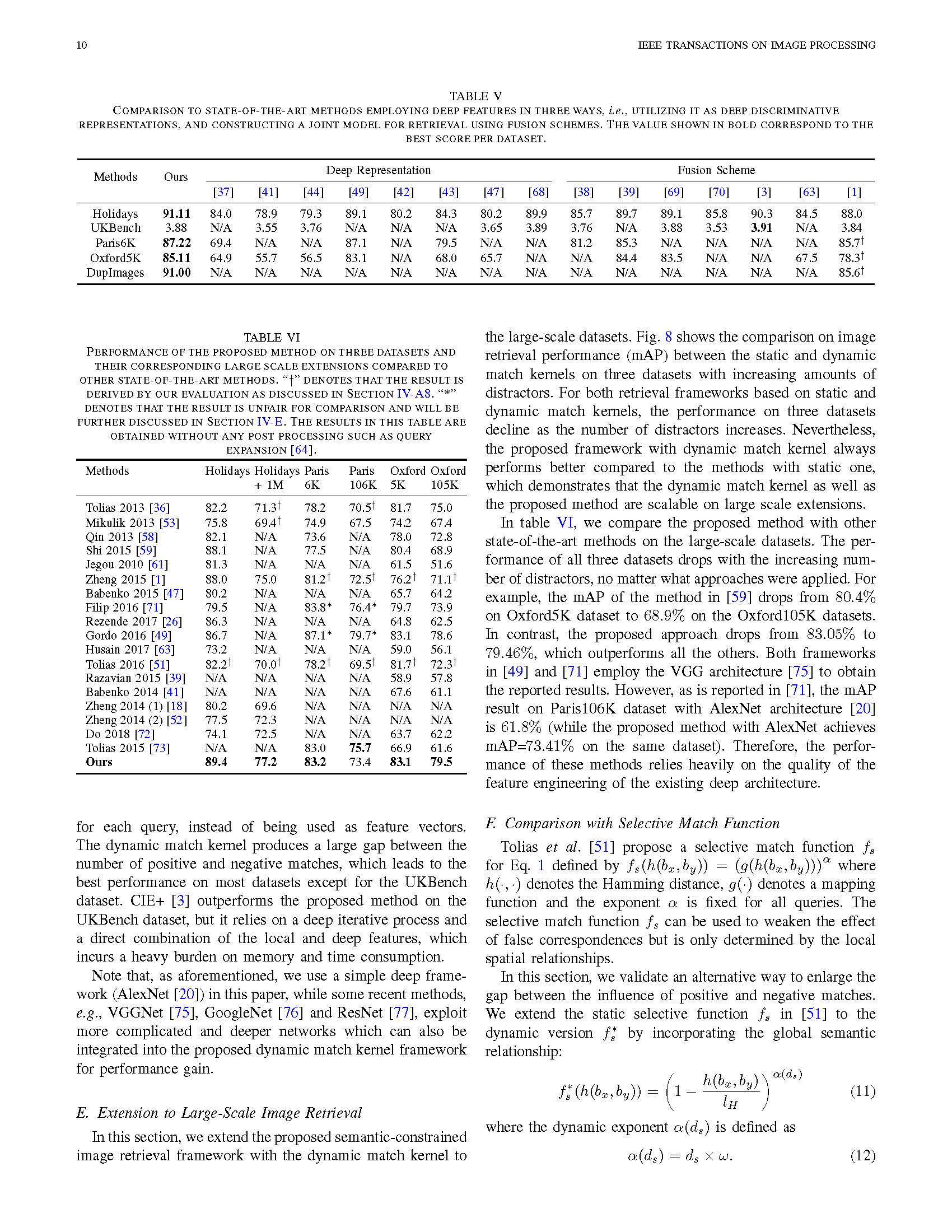

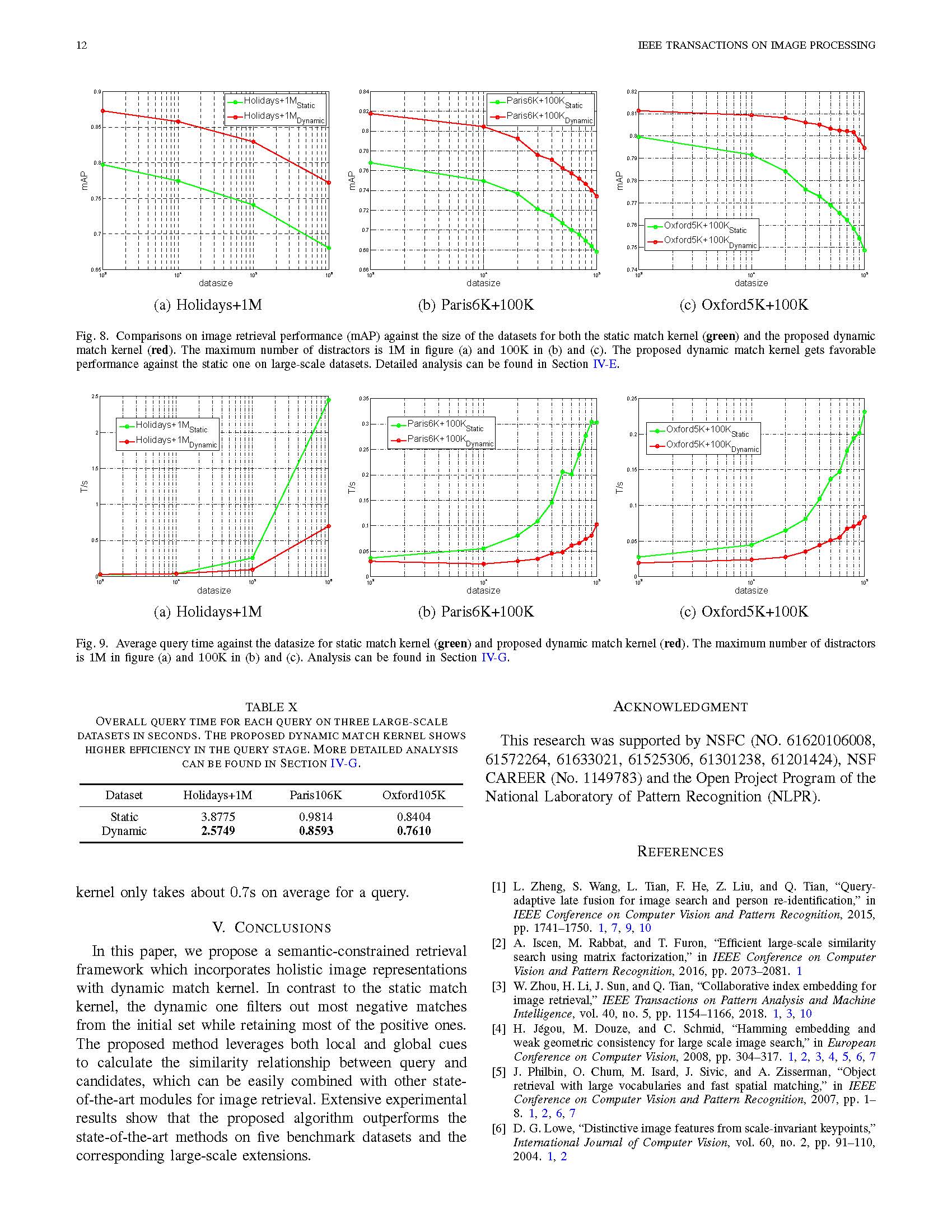

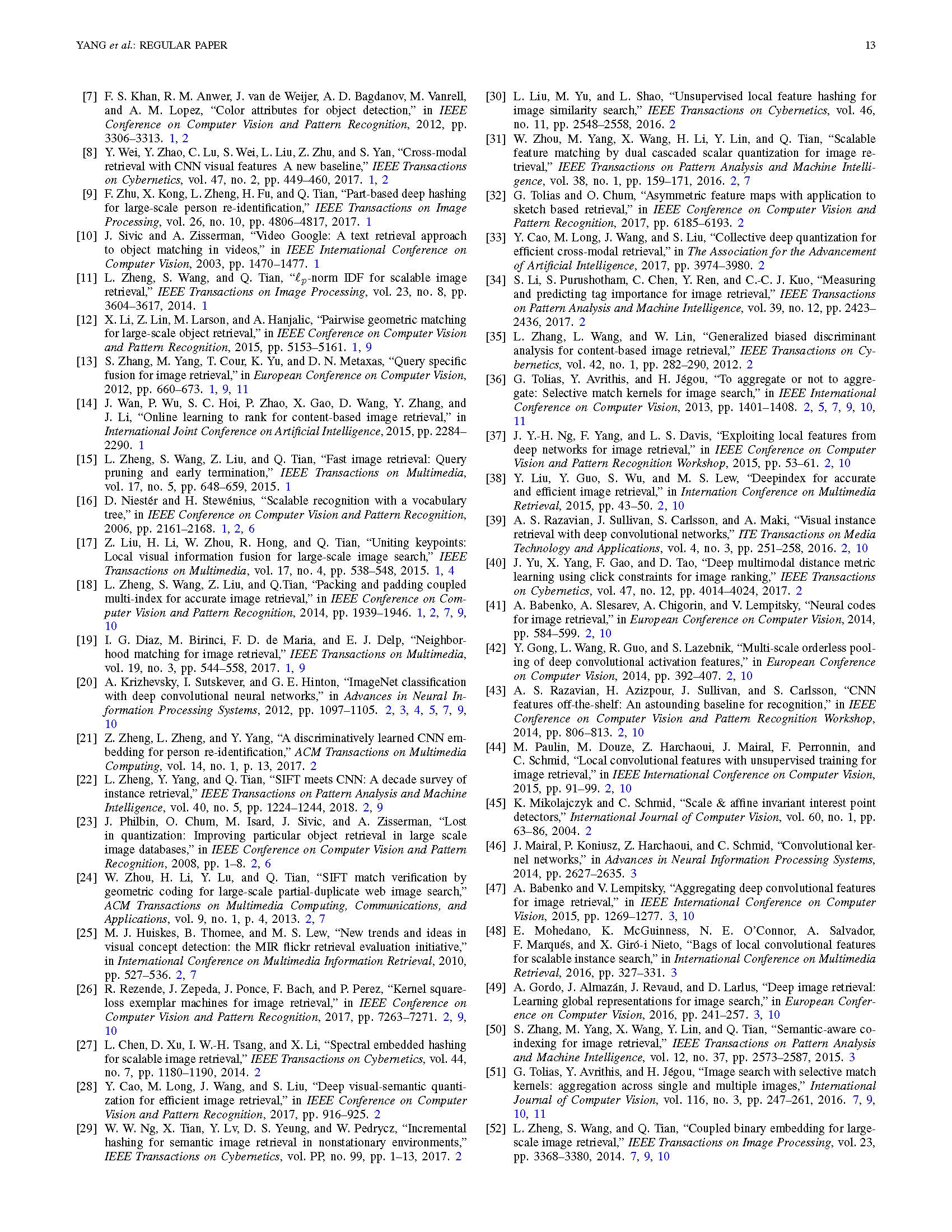

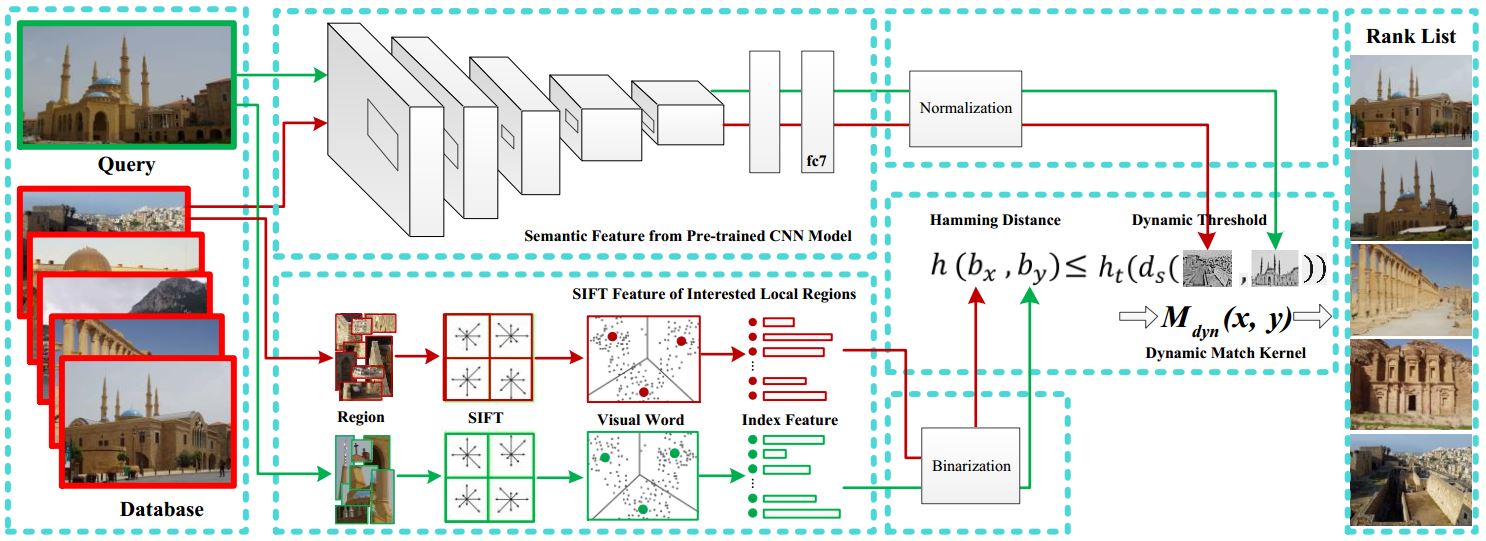

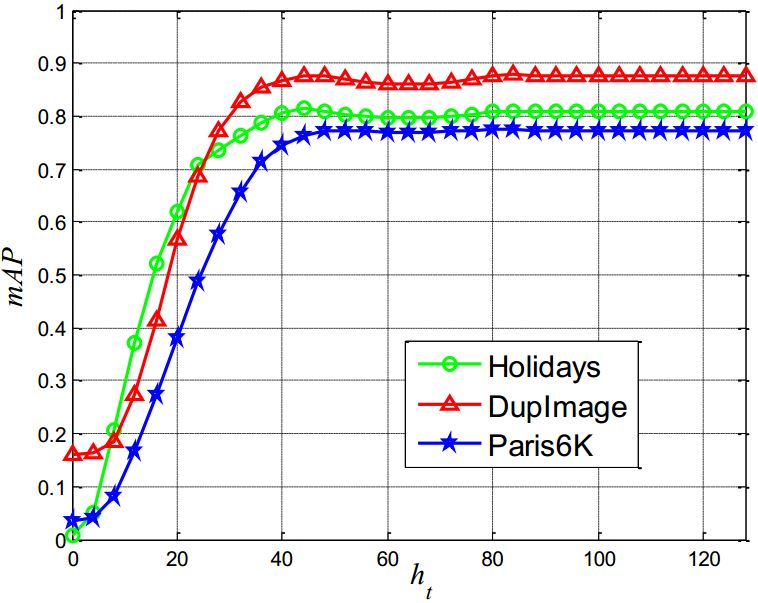

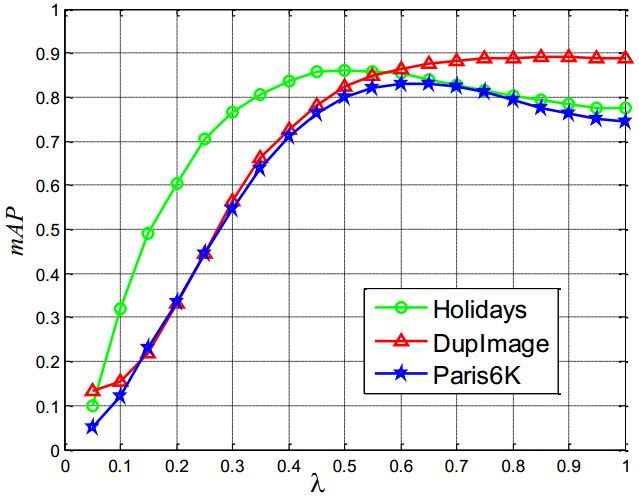

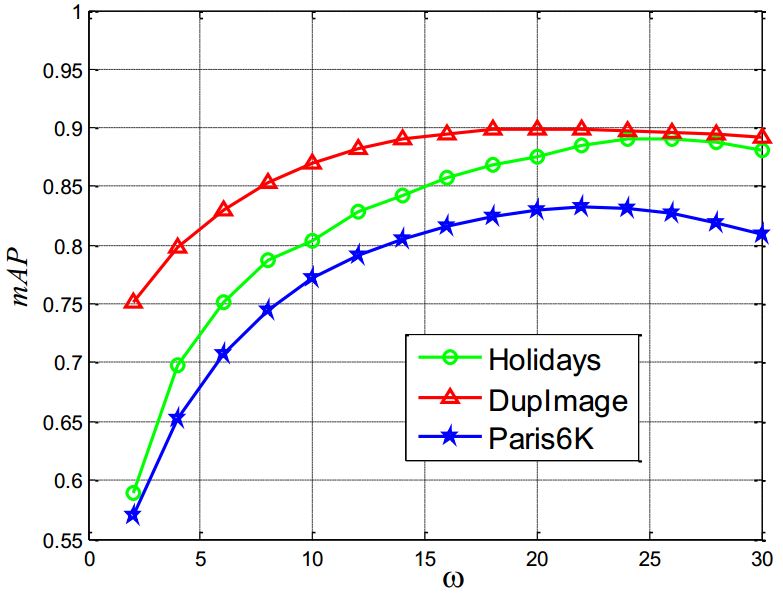

For image retrieval methods based on bag of visual words, much attention has been paid to enhancing the discriminative power of local features. Although retrieved images are usually similar to a query in minutiae, they may differ significantly from a semantic perspective, a difference that convolutional neural networks (CNNs) can effectively distinguish. Such images should not be considered relevant pairs. To tackle this problem, we propose to construct a dynamic match kernel by adaptively calculating the matching thresholds between query and candidate images based on the pairwise distance among deep CNN features. In contrast to the typical static match kernel, which is independent of the global appearance of retrieved images, the dynamic one leverages semantic similarity as a constraint for determining matches. Accordingly, we propose a semantic-constrained retrieval framework incorporating the dynamic match kernel, which focuses on matched patches between relevant images and filters out those between irrelevant pairs. Furthermore, we demonstrate that the proposed kernel complements recent methods such as Hamming embedding, multiple assignment, local descriptor aggregation and graph-based re-ranking, and that it outperforms the static kernel under various settings on widely used evaluation metrics. We also propose to evaluate the matched patches both quantitatively and qualitatively. Extensive experiments on five benchmark datasets and with large-scale distractors validate the merits of the proposed method against state-of-the-art image retrieval methods.
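To make the contrast between a static and a dynamic match kernel concrete, here is a minimal Python/NumPy sketch under stated assumptions. It is not the authors' implementation: the linear rule in `dynamic_threshold`, the 64-bit binary signatures, the values `tau_max=24`, `tau_min=8` and `sigma=16`, and all function names are hypothetical choices for illustration. The weighting exp(-h²/σ²) is a common choice for weighted Hamming embedding. The only idea taken from the text is that the Hamming threshold used to accept local matches depends on the distance between the global CNN features of the query and the candidate.

```python
import numpy as np


def hamming(a: np.ndarray, b: np.ndarray) -> int:
    """Hamming distance between two binary signatures stored as 0/1 arrays."""
    return int(np.count_nonzero(a != b))


def static_threshold(cnn_dist: float, tau: int = 24) -> int:
    """Static kernel: one fixed threshold, regardless of global similarity."""
    return tau


def dynamic_threshold(cnn_dist: float, tau_max: int = 24, tau_min: int = 8,
                      d_max: float = 2.0) -> int:
    """Hypothetical dynamic rule: shrink the threshold linearly as the CNN distance grows.

    cnn_dist is the L2 distance between L2-normalized global CNN features, so it
    lies in [0, 2]. Semantically close pairs keep a loose threshold; distant
    pairs get a strict one.
    """
    ratio = min(max(cnn_dist / d_max, 0.0), 1.0)
    return int(round(tau_max - ratio * (tau_max - tau_min)))


def cnn_distance(g_query: np.ndarray, g_cand: np.ndarray) -> float:
    """L2 distance between L2-normalized global CNN descriptors."""
    q = g_query / np.linalg.norm(g_query)
    c = g_cand / np.linalg.norm(g_cand)
    return float(np.linalg.norm(q - c))


def num_matches(pairs, threshold: int) -> int:
    """Count local descriptor pairs (sharing a visual word) within the threshold."""
    return sum(1 for q_sig, c_sig in pairs if hamming(q_sig, c_sig) <= threshold)


def match_score(pairs, threshold: int, sigma: float = 16.0) -> float:
    """Weighted HE-style score: accepted matches contribute exp(-h^2 / sigma^2)."""
    score = 0.0
    for q_sig, c_sig in pairs:
        h = hamming(q_sig, c_sig)
        if h <= threshold:
            score += float(np.exp(-(h ** 2) / sigma ** 2))
    return score


def make_pair(h: int, bits: int = 64):
    """Toy query/candidate signatures that differ in exactly h bits."""
    q = np.zeros(bits, dtype=np.uint8)
    c = q.copy()
    c[:h] = 1
    return q, c


# Three local pairs at Hamming distances 5, 12 and 20.
pairs = [make_pair(h) for h in (5, 12, 20)]
d = 1.5  # assumed CNN distance of a semantically dissimilar candidate
print(num_matches(pairs, static_threshold(d)))   # 3
print(num_matches(pairs, dynamic_threshold(d)))  # 2
```

With the static threshold all three local pairs count as matches. With the dynamic threshold, the semantically distant candidate keeps only the two closest pairs, which illustrates how a global CNN cue can suppress minutiae-level matches between semantically different images.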

Baseline System

Our baseline method is built mainly on this informative tutorial, with source code from Prof. Liang Zheng (many thanks).

Here, we briefly summarize the baseline method in five steps.

Implementation of Dynamic Match Kernel

The key implementation of this paper, with detailed explanations, is available on GitHub, and part of the intermediate results can be found on Google Drive or Baidu Pan. If you use our code or data in your research, please cite our paper.